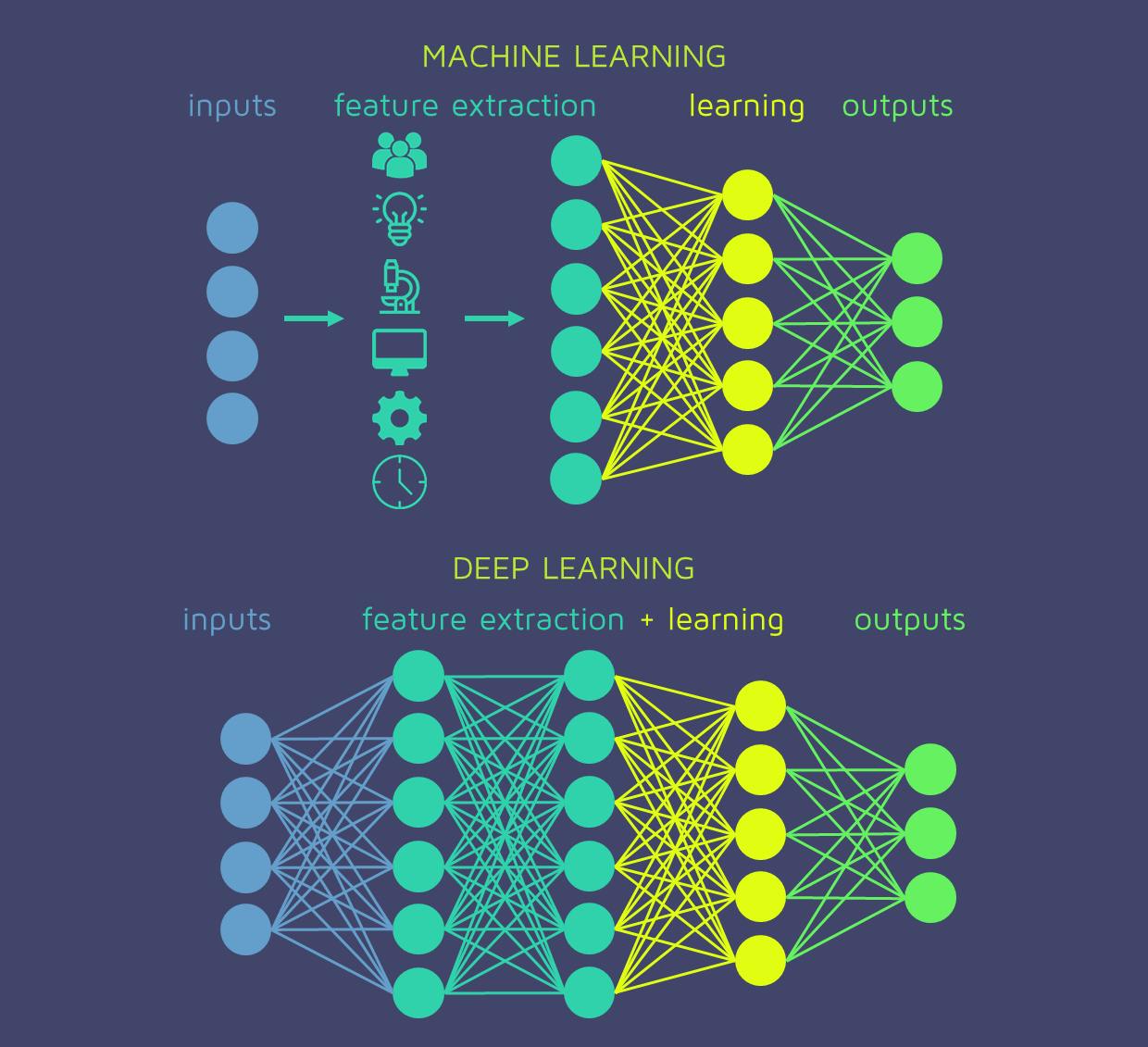

Deep Learning (DL) approaches have revolutionized various areas over the last several years, including computer vision and natural language processing. Usually, the data used by these applications is specified over a 2-dimensional grid, 3-dimensional volume, or other structures over n-dimensional Euclidean space. Convolutional neural networks (CNN) and multi-layer perceptron (MLP) models, two common DL approaches, are unable to capture the structure of data in non-Euclidean space.

The interactions between data items in non-Euclidean domains are captured by some types of data structures, such as graphs. The Internet, social networks, web-scale networks, biological networks, and economic networks are well-known real-world instances of graphs. By using techniques like triangle counting, breadth-first search (BFS), depth-first search (DFS), and linked components, among others, traditional methods for identifying the structure of data in these networks are referred to as "graph analytics" in computer science.

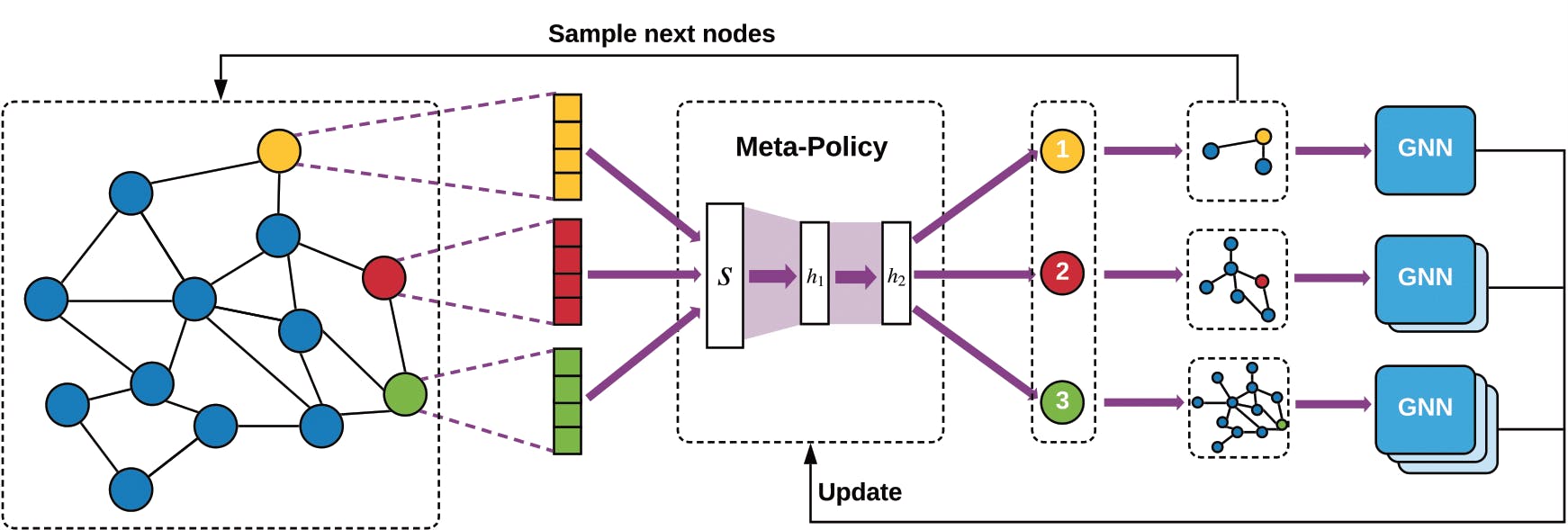

A contemporary, data-driven method for examining big graphs and figuring out their structure is called graph neural networks (GNN). A GNN is made up of three essential parts: I an embedding, which is

- Information associated with each node (and optionally each edge) in the graph;

- A recursive message-passing aggregation algorithm that combines vertex/edge embeddings in creative ways;

- A neural network model (typically an MLP) that iteratively learns the graph structure using conventional gradient-descent training algorithms used in DL.

It is impossible to overstate the significance of GNNs in the modern world because they have the ability to analyze intricate relationships at all scales, from the macro level of the Internet or social media networks to the micro level of human protein molecules and their interactions with drug molecules to help with drug discovery.

It is necessary to describe tasks like node classification, link prediction, graph classification, community discovery, and network similarity in order to perform such studies on graphs using machine learning methods. To have the "best of both worlds"—accuracy of accurate graph analysis paired with the speed of training neural networks—the GNN technique may supplement rather than replace conventional graph analytics.

Flavors, Primitives, and Process in GNN Training

Full-batch training and mini-batch training using sampling approaches are the two main types of GNN training. A data scientist may pick any flavour for their application depending on the kind of graph data. Because each vertex is linked to a dense feature vector during full-batch training, a lot of memory is needed to store both the embeddings and their gradients. This is because all nodes in the training dataset engage in computation during each iteration. In contrast, mini-batch training randomly chooses a set of vertices and their associated sampled neighbourhoods from the training dataset. If the vertex set size is small and the size of the sampled neighbourhood around each vertex is constrained, mini-batch training has a lower memory capacity requirement. The two primary primitives used in the calculation, regardless of flavour, are I Aggregation and (ii) Update.

Optimizations in Intel Xenon with GNN

The well-known Deep Graph Library (DGL) implements graph data-structures (such as the Adjacency Matrix) in common Coordinate list (COO), Compressed Sparse Row (CSR), and Compressed Sparse Column (CSC) formats.

- It also offers a rich collection of abstractions and built-in functions to implement different Aggregation primitive variants.

- It employs PyTorch's autograd feature to create automated differentiation, among other neural network frameworks.

- It carries out the essential C++ functionality for manipulating graphs and aggregating primitives.

Initial vertex features are represented by DGL as a PyTorch Tensor object, allowing AGG operations as well as other neural network operations on them. The reasoning above and The graph should be represented as an adjacency matrix and the vertex characteristics as a dense PyTorch Tensor object.

Conclusion

An important new type of AI tasks is Graph Neural Networks. This article shows that when the underlying software primitives are well-optimized to make use of the available architectural characteristics, current Intel Xeon processors may give excellent performance for this class of tasks.

- Distributed GNN training presented a method to expand GNN performance from a single node to numerous nodes as we continue to concentrate on this crucial task.

- Modifying that strategy and trying to expand optimization efforts beyond GraphSAGE to include additional kinds of GNNs.